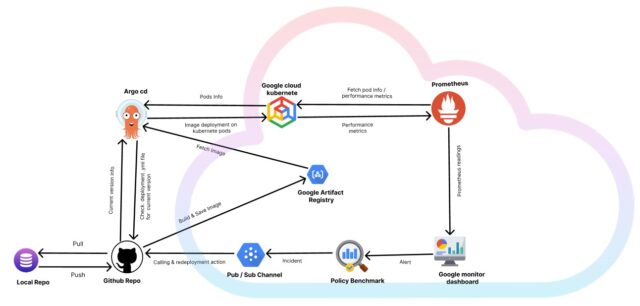

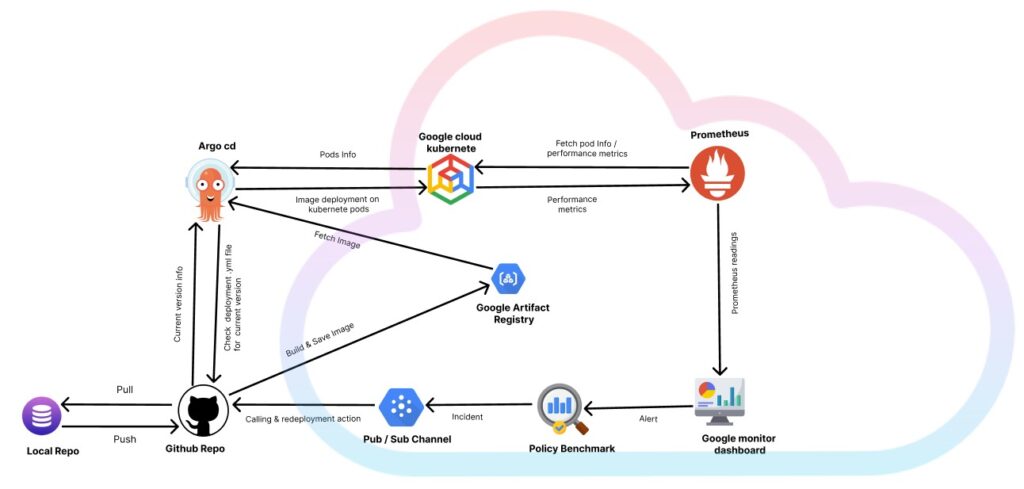

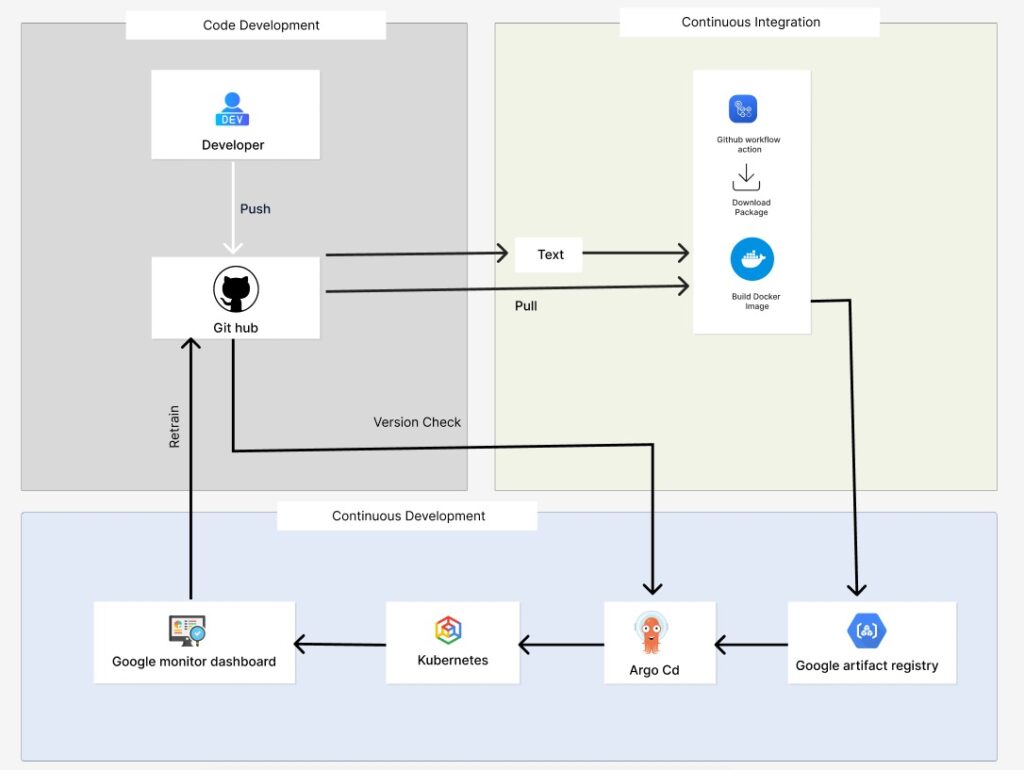

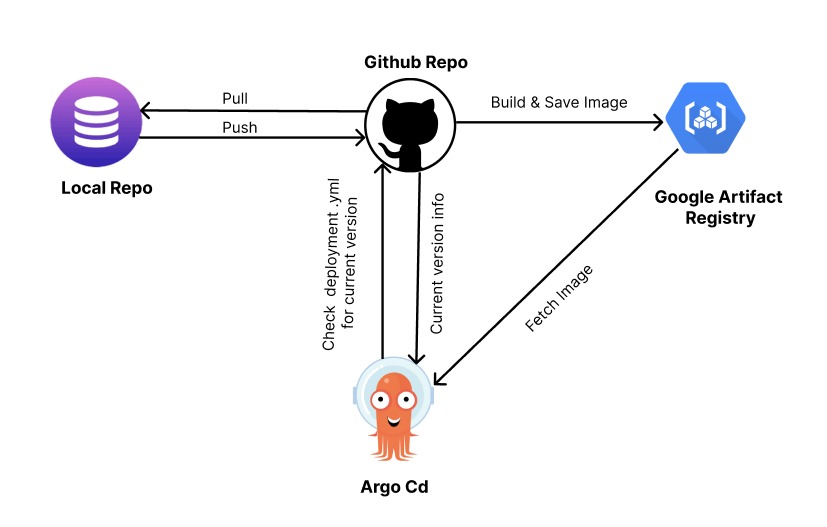

This project delivers an end-to-end automated MLOps–GitOps pipeline for a housing-price prediction service. A Decision Tree model is trained in Python and packaged into a FastAPI application, which is containerized with Docker and pushed to Google Artifact Registry. A GitHub Actions workflow builds the Docker image (tagged with the workflow run number) and updates the image tag in the Kubernetes Deployment manifest in Git. ArgoCD then automatically syncs that change to a three-node GKE cluster, so rollouts need no manual steps. Liveness and readiness probes keep the service self-healing: Kubernetes restarts containers that fail the liveness probe and routes traffic only to pods that pass the readiness probe.

Meanwhile, the model's performance is continuously monitored through Prometheus metrics for request count, latency, and accuracy. When accuracy falls below a threshold, a retraining workflow is triggered, and it commits the new model artifact back to Git. That commit in turn dispatches the CI/CD pipeline again, which rebuilds and redeploys the updated container. Every step runs under GitOps control, so each change is recorded in Git and can be audited or rolled back.
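The manifest-update step of the GitHub Actions workflow could look roughly like the Python sketch below. It assumes the workflow rewrites the `image:` line of the Deployment manifest in place before committing it. The helper name `bump_image_tag` and the example registry path are hypothetical, and the real workflow may use `sed`, `yq`, or Kustomize instead.

```python
import re


def bump_image_tag(manifest: str, image_repo: str, new_tag: str) -> str:
    """Return `manifest` with every `image: <image_repo>:<old-tag>` line retagged to `new_tag`.

    Hypothetical helper: assumes unquoted image values written on a single line,
    e.g. `image: us-central1-docker.pkg.dev/my-project/housing-repo/housing-api:41`
    (placeholder Artifact Registry path).
    """
    # Group 1 keeps the indentation, an optional list dash, and the `image:` key verbatim.
    # The escaped repo must be followed directly by ":", so similarly named images
    # (e.g. "housing-api-v2") are left alone. \S+ stops at the end of the line.
    pattern = re.compile(
        r"^([ \t]*(?:-[ \t]*)?image:[ \t]*)" + re.escape(image_repo) + r":\S+",
        re.MULTILINE,
    )
    # A function replacement avoids any backslash interpretation in the new value.
    updated, count = pattern.subn(
        lambda m: f"{m.group(1)}{image_repo}:{new_tag}", manifest
    )
    if count == 0:
        raise ValueError(f"no image line for {image_repo!r} found in manifest")
    return updated
```

In a workflow step, the new tag could come from the `GITHUB_RUN_NUMBER` environment variable that GitHub Actions sets for every run. The job would then commit and push the modified manifest, and ArgoCD would pick up the change on its next sync. Raising an error when no line matches makes a renamed image or a moved manifest fail the build loudly instead of silently deploying the old tag.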

Technologies used:

- Languages & Frameworks: Python, FastAPI, Pydantic, pandas, scikit-learn, joblib, uvicorn

- Containerization: Docker, Docker Desktop, Dockerfile

- CI/CD & Automation: GitHub Actions (build-and-update, retrain workflows), repository_dispatch

- Cloud & Container Registry: Google Cloud (GCP), GKE, Artifact Registry, gcloud CLI

- Orchestration & GitOps: Kubernetes (Deployment, Service, CronJob), ArgoCD

- Experiment Tracking & Metrics: MLflow, prometheus_client (Counter, Histogram, Gauge)

- Version Control & GitOps Triggers: Git, branch protection, semantic tagging, GitHub Secrets (GCP_SA_KEY, GITHUB_TOKEN, PUSH_TOKEN)
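The retraining trigger listed above (`repository_dispatch`, authenticated with a token from GitHub Secrets) can be sketched with the standard library alone. This sketch assumes the monitor compares a measured accuracy against a fixed threshold and, when accuracy drops below it, posts a `repository_dispatch` event to GitHub's REST endpoint `POST /repos/{owner}/{repo}/dispatches`. The threshold value, the event type `retrain-model`, and all helper names are hypothetical. The project's actual trigger mechanism and threshold are not documented here.

```python
import json
import urllib.request
from typing import Optional

# Hypothetical values: the real threshold and event type are not stated in this README.
ACCURACY_THRESHOLD = 0.80
EVENT_TYPE = "retrain-model"


def should_retrain(accuracy: float, threshold: float = ACCURACY_THRESHOLD) -> bool:
    """Retrain only when the monitored accuracy has dropped below the threshold."""
    return accuracy < threshold


def build_dispatch_request(
    owner: str, repo: str, token: str, event_type: str, payload: dict
) -> urllib.request.Request:
    """Build (but do not send) a repository_dispatch POST for the GitHub REST API."""
    url = f"https://api.github.com/repos/{owner}/{repo}/dispatches"
    body = json.dumps({"event_type": event_type, "client_payload": payload}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def trigger_retrain_if_needed(
    accuracy: float,
    owner: str,
    repo: str,
    token: str,
    threshold: float = ACCURACY_THRESHOLD,
    send: bool = False,
) -> Optional[urllib.request.Request]:
    """Return the dispatch request if retraining is needed, optionally sending it."""
    if not should_retrain(accuracy, threshold):
        return None
    req = build_dispatch_request(owner, repo, token, EVENT_TYPE, {"accuracy": accuracy})
    if send:
        # urlopen raises HTTPError on 4xx/5xx; GitHub replies 204 No Content on success.
        with urllib.request.urlopen(req, timeout=10) as resp:
            if resp.status != 204:
                raise RuntimeError(f"unexpected status {resp.status}")
    return req
```

A retrain workflow declared with `on: repository_dispatch` and `types: [retrain-model]` would then start. The `send=False` default keeps the helper side-effect free for testing. One plausible reason for a separate `PUSH_TOKEN` is that pushes made with the default `GITHUB_TOKEN` do not start new workflow runs (`repository_dispatch` and `workflow_dispatch` are the exceptions), so a commit from the retrain workflow would not otherwise re-trigger the build.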